Split tests allow you to discover which version of an email is most effective. You can create different variations on an email (three versions with different subject lines, for example) and send them out to your contact list at random, in equal numbers. The statistics that are returned let you see which version has the highest open or click-through rate. Lead Liaison also supports automatic split testing, in which the “candidate” email versions are sent to only a small proportion of your contact list, and the “winner” version is then sent to the remaining contacts in the list.

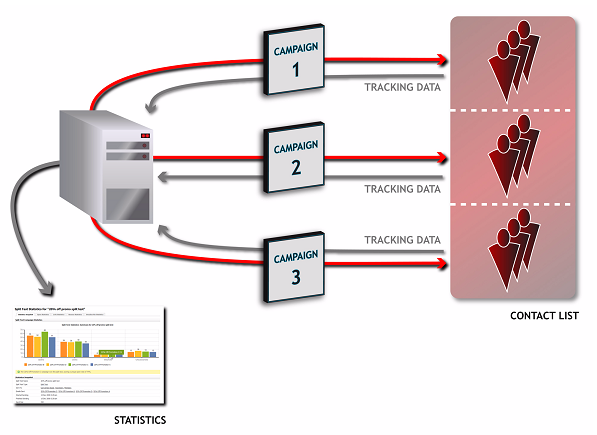

Split testing, also known as “A/B testing”, is a scientific method of testing your email campaigns’ effectiveness using statistical analysis. When you perform a normal campaign send, you send one email to your whole contact list. You can then see how well it performed using the email software’s statistical analysis tools. However, when you perform a split test you “split” your contact list into different parts and send a different email campaign to each part. Then, you can see how well each email performed and select the most effective.
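The random, equal-sized split described above can be illustrated with a minimal Python sketch. It is not Lead Liaison's implementation; the function name `split_contacts` and the sample addresses are hypothetical and serve only to show the idea.

```python
import random


def split_contacts(contacts, num_variants, seed=None):
    """Shuffle contacts and deal them into num_variants near-equal groups."""
    if num_variants < 1:
        raise ValueError("num_variants must be at least 1")
    rng = random.Random(seed)
    shuffled = list(contacts)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    # Deal round-robin so that group sizes differ by at most one contact.
    return [shuffled[i::num_variants] for i in range(num_variants)]


# Hypothetical contact list of ten addresses, split three ways.
contacts = [f"contact{i}@example.com" for i in range(10)]
groups = split_contacts(contacts, 3, seed=42)
for index, group in enumerate(groups):
    print(f"Variant {index + 1}: {len(group)} contacts")
```

Shuffling before dealing means each contact is equally likely to land in any group, which keeps the groups comparable.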

Split testing is essentially a competition between email campaigns, in which the losers are discarded and the winner is used in future campaigns. You can select the criteria on which to judge the winner (open rate or click-through rate) and the email software tracks each email’s performance and decides the winner.
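As a rough illustration of how a winner might be chosen by either criterion, consider the sketch below. The `CampaignStats` class, the `pick_winner` function and the sample figures are all hypothetical. Rates are computed here as a share of emails sent, which is an assumption; the software may instead compare raw counts of openers or clickers.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    name: str
    sent: int
    opens: int   # unique recipients who opened the email
    clicks: int  # unique recipients who clicked a link

    def open_rate(self):
        return self.opens / self.sent if self.sent else 0.0

    def click_rate(self):
        return self.clicks / self.sent if self.sent else 0.0


def pick_winner(stats, criterion="open"):
    """Return the campaign with the highest rate for the chosen criterion."""
    if criterion == "open":
        key = CampaignStats.open_rate
    elif criterion == "click":
        key = CampaignStats.click_rate
    else:
        raise ValueError("criterion must be 'open' or 'click'")
    return max(stats, key=key)


# Made-up figures for two variations of the same email.
results = [
    CampaignStats("Subject line A", sent=500, opens=120, clicks=30),
    CampaignStats("Subject line B", sent=500, opens=110, clicks=45),
]
winner_by_opens = pick_winner(results, "open")
winner_by_clicks = pick_winner(results, "click")
print(winner_by_opens.name, "/", winner_by_clicks.name)
```

In this made-up data the two criteria pick different winners, which is why the criterion should be chosen before the test is sent.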

Split tests are usually performed on variations of the same basic email campaign, not on vastly different emails. For example, you might split test two emails that are identical except for their slightly different subject lines, in order to see which variation is opened most often. However, you would not split test one email advertising a seasonal discount on store merchandise and another offering a free holiday; this would not produce meaningful results.

The term “A/B testing” suggests a split test must be a competition between two emails. However, this is not the case with the email software, which allows you to create tests using any number of emails. This means that you can perform highly detailed and efficient split tests that compare multiple email variations simultaneously.

Lead Liaison supports both manual and automatic split testing.

Manual Split Testing:

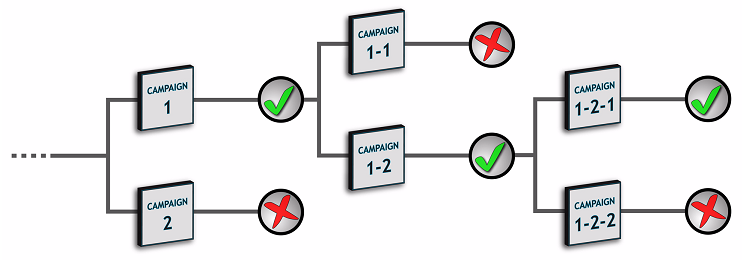

Once you have discovered the most effective email campaign, it’s up to you what you do next. You could use the winning email for your next campaign, send the winning email to another contact list, or perform further split tests on variations of the winning email (an example of which is shown in the following figure) in order to further refine its effectiveness.

Multi-Level Split Testing:

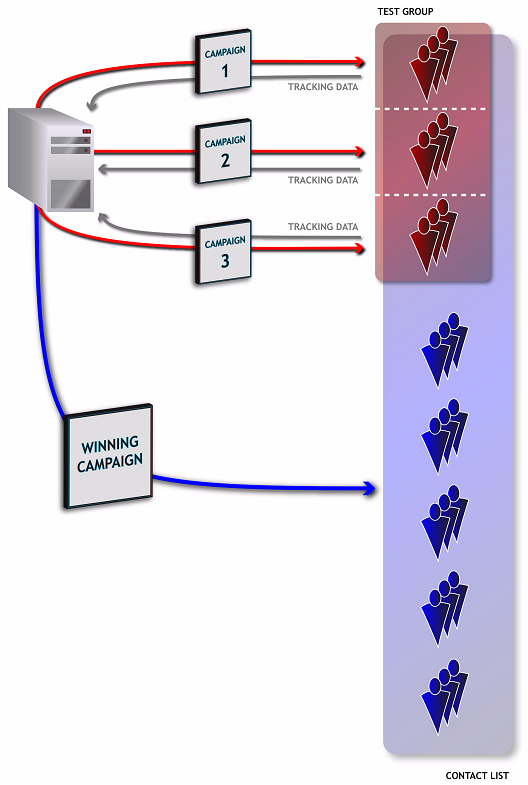

When you use automatic split testing, the test emails are sent to only a percentage of the contacts in your list (you define the percentage). You also define the test’s duration. The email software sends the test emails and tracks their performance. Once the test duration has elapsed, the email software declares a winner based on the criterion you selected, and automatically sends the winning email to the rest of your contact list.
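The following Python sketch shows how an automatic test could divide a contact list into a test pool and a remainder. It is an illustration under stated assumptions, not the product's code: `plan_automatic_test`, its parameters and the sample data are hypothetical, and the test duration and performance tracking are not modeled.

```python
import random


def plan_automatic_test(contacts, variant_names, test_percent, seed=None):
    """Split contacts into per-variant test groups plus a held-back remainder."""
    if not 0 < test_percent <= 100:
        raise ValueError("test_percent must be in the range (0, 100]")
    if not variant_names:
        raise ValueError("at least one variant is required")
    rng = random.Random(seed)
    shuffled = list(contacts)
    rng.shuffle(shuffled)
    test_size = int(len(shuffled) * test_percent / 100)
    test_pool, remainder = shuffled[:test_size], shuffled[test_size:]
    # Deal the test pool evenly across the candidate emails.
    count = len(variant_names)
    assignments = {
        name: test_pool[i::count] for i, name in enumerate(variant_names)
    }
    return assignments, remainder


# Hypothetical list of 1,000 contacts, 20% used for testing two candidates.
contacts = [f"contact{i}@example.com" for i in range(1000)]
assignments, remainder = plan_automatic_test(
    contacts, ["Email A", "Email B"], test_percent=20, seed=7
)
for name, group in assignments.items():
    print(f"{name}: {len(group)} test recipients")
print(f"Held back for the winner: {len(remainder)} contacts")
```

With these numbers each candidate goes to 100 contacts, and the winning email is later sent to the remaining 800.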

Automatic Split Testing:

If you have not used split testing before, here are some tips that might be helpful:

Keep it simple. As with any scientific test, results are only meaningful if they compare two things that differ only in a single feature. For example, test two emails that differ only in their font colors, or two emails that differ only in their subject lines. Don’t test one email against another that has a different subject line, graphic design and body text; the results would not let you know which element is affecting performance. Lead Liaison lets you test an unlimited number of emails simultaneously. For the most informative results when testing multiple variations, create an email campaign for each variation or combination of variations.
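To see how many campaigns "each variation or combination of variations" implies, the sketch below enumerates every combination of a few features. The function name `build_variations` and the feature values are hypothetical examples.

```python
from itertools import product


def build_variations(options):
    """Return one dict per combination of the supplied feature values."""
    names = list(options)
    value_lists = [options[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Two subject lines and two button colors give four campaigns to create.
variations = build_variations({
    "subject": ["Spring sale starts today", "20% off this week"],
    "button_color": ["blue", "green"],
})
for number, variation in enumerate(variations, start=1):
    print(number, variation)
```

The number of campaigns is the product of the number of values per feature, so it grows quickly. Each extra campaign also shrinks the share of contacts that receives any one variation.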

Include a “control”. In scientific testing, a “control” is an element of the test that provides a base result against which other results can be compared. For instance, in a pharmaceutical test a section of the test group is given a placebo instead of the real drug. This allows the scientist conducting the test to exclude the influence of other variables on the results. In email split testing this means that you should always include the original version of the email, which has had no changes or variations applied to it. This is particularly important if you are split testing variations of an email campaign that you have used in the past. In order to accurately gauge the effectiveness of each new variation, you must compare it against the control email (which is sent at the same time as the variations) and NOT the email you sent in the past. If you compared the new variations’ performance against that of the email you sent in the past, your results could be affected by a multitude of variables (time of day the email was sent, the day of the week, the season, economic conditions, and many more) that would severely impair the comparison.
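One common way to judge whether a variation really beats the control, rather than differing by chance, is a two-proportion z-test. The sketch below is a generic statistical illustration and is not described in the Lead Liaison documentation; the function name and the sample figures are hypothetical.

```python
import math


def two_proportion_z(control_hits, control_sent, variant_hits, variant_sent):
    """Return (z, two-sided p-value) for variant rate minus control rate."""
    control_rate = control_hits / control_sent
    variant_rate = variant_hits / variant_sent
    pooled = (control_hits + variant_hits) / (control_sent + variant_sent)
    std_error = math.sqrt(
        pooled * (1 - pooled) * (1 / control_sent + 1 / variant_sent)
    )
    if std_error == 0:
        return 0.0, 1.0  # no variation in the data, so no evidence either way
    z = (variant_rate - control_rate) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up open counts: control opened by 100 of 500, variation by 130 of 500.
z, p_value = two_proportion_z(100, 500, 130, 500)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone. The comparison is only valid when the control and the variation were sent at the same time to randomly chosen groups.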

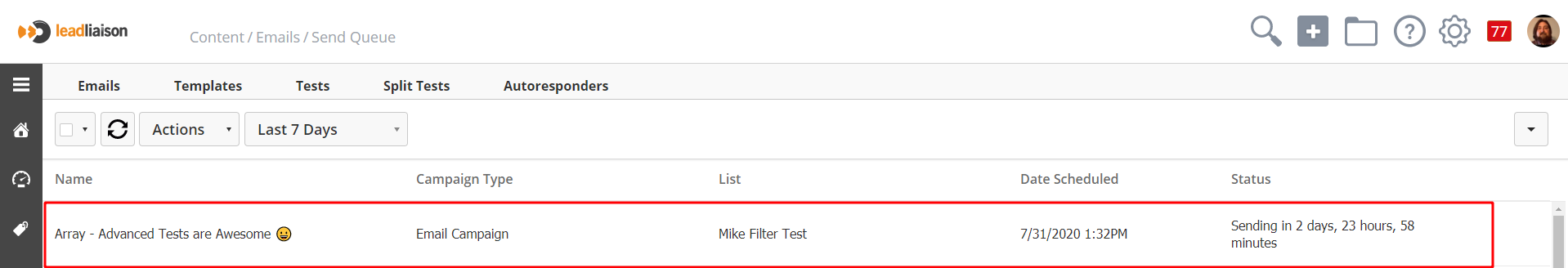

Click a test’s Pause link to stop sending the test. Click its Resume link to begin sending the test again. Note: These options display only when a test is currently being sent.

Click a test’s Send link to perform the test. Note: Before you send Best performing tests, you need to enable Split test campaigns in the Settings > Cron settings screen.

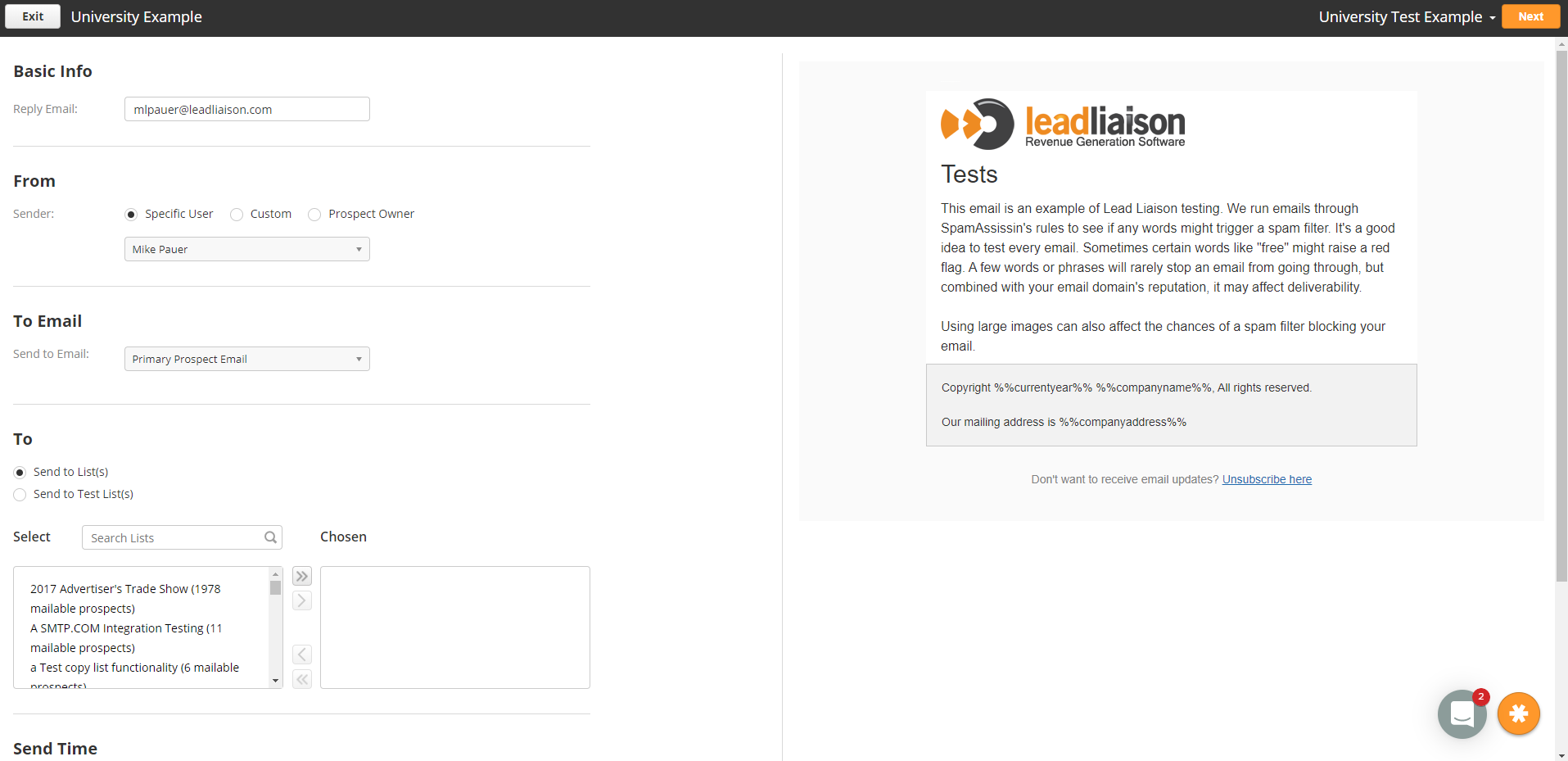

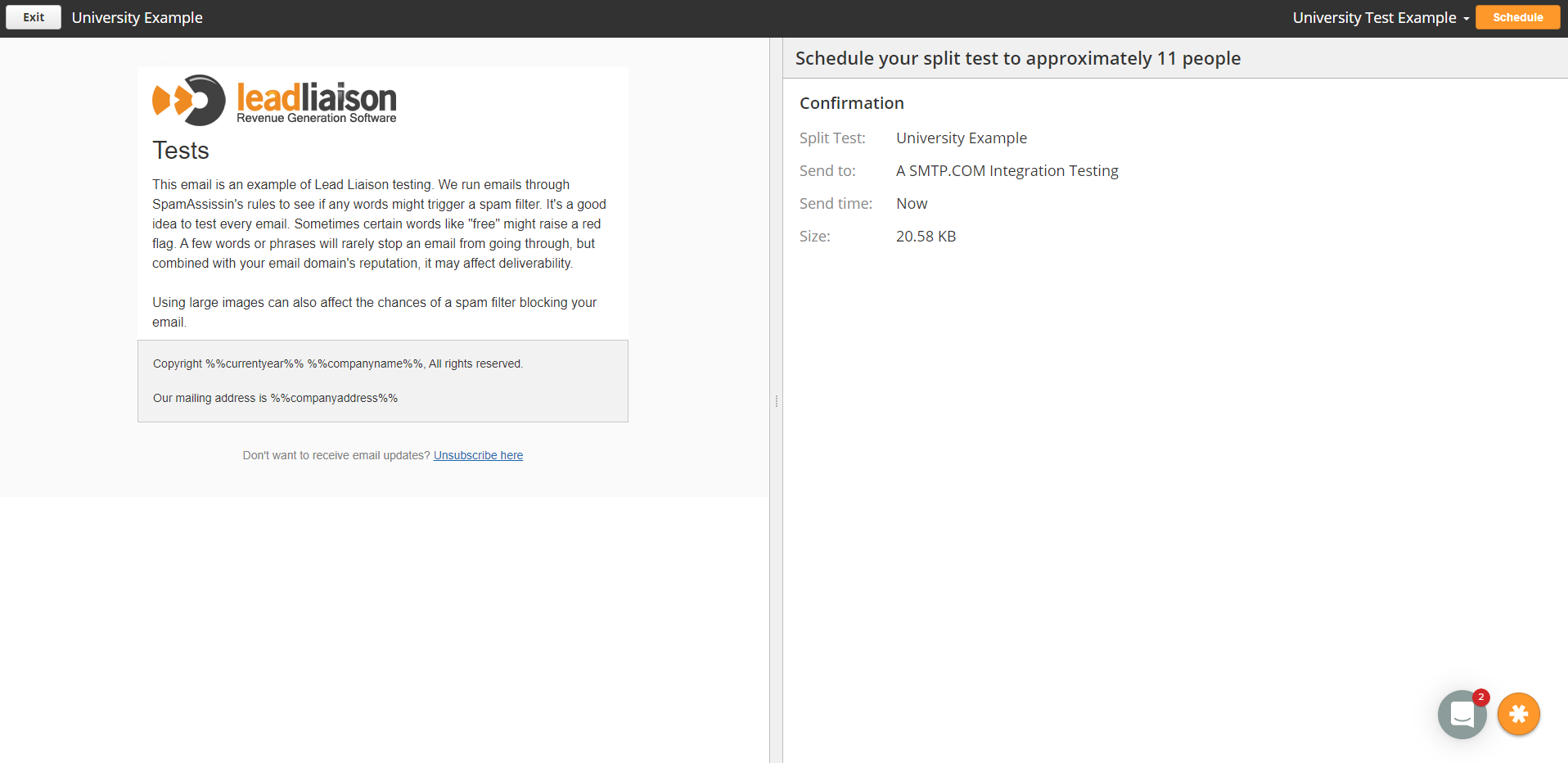

To send a split test to members of your contact lists:

Congratulations, your Split Test has been sent!

To view statistics about the split tests you have performed, including the winner of each test, click Reports > Emails in the navigation bar. You will be able to see all the campaigns that were part of the split test, along with their statistics. Note: This screen displays information about all campaigns, not just split tests.

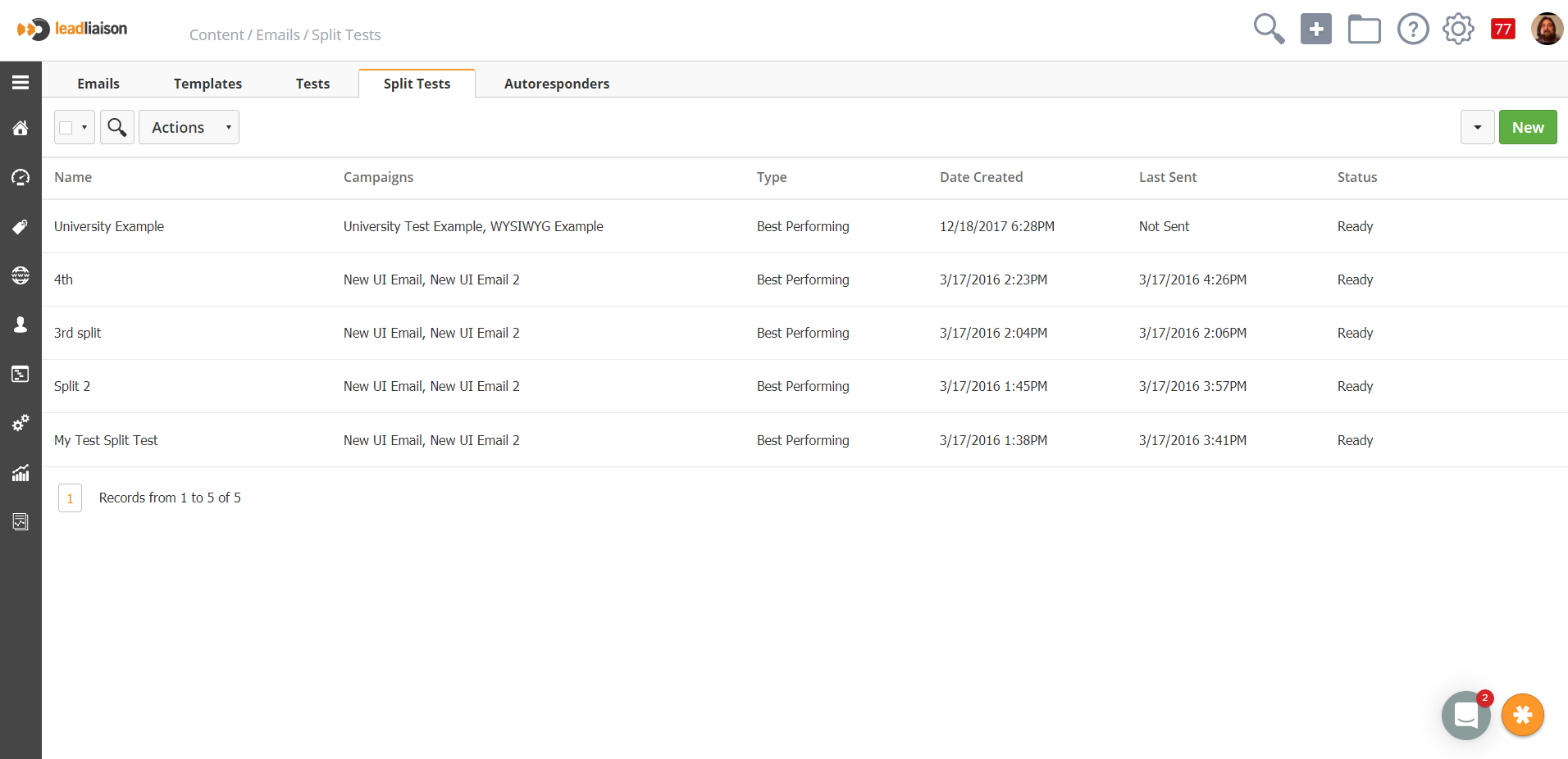

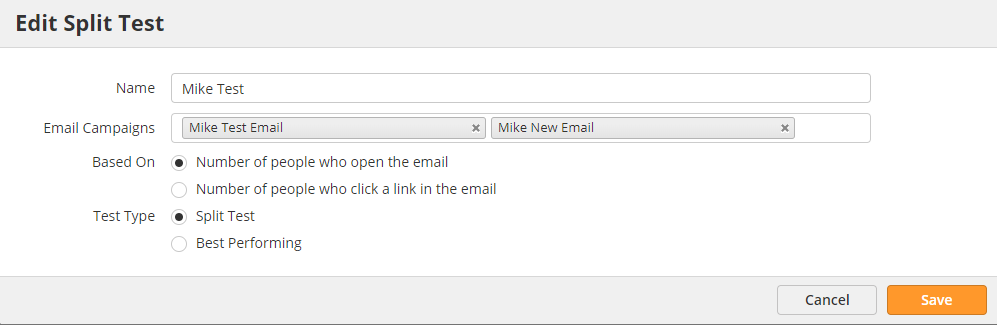

To create a new split test, click Content > Emails in the navigation bar, open the Split Tests tab, and click the New button. To edit an existing split test, click Split Tests in the navigation bar. Then click the relevant test’s Edit link. Note: This screen allows you to set up your test; you can choose the people to whom you want to send it later on.

Select Number of people who open the email to choose the email campaign that is opened most often.

Select Number of people who click a link in the email to choose the email in which a link is clicked most often (the one with the highest click-through rate).

Save = Click this to save your changes to the test and return to the View split tests screen.

Cancel = Click this to return to the View split tests screen without saving your changes.